By Ctein

Mike's post some time back about the deficiencies of CD audio had more to do with photography than you might think. Audiophiles have long been aware that the 44KHz sampling rates for digital sound are not adequate for full fidelity. Mathematically-savvy audiophiles and signal specialists even understand why.

Only a few folks realize these problems occur in digital imaging (both in-camera and scanning film) as well.

The problem lies in the popular misunderstanding of the Nyquist-Shannon criterion. That's the assertion that in order to record frequencies of X, you must sample at 2*X. It even seems intuitive; you need two pixels to convey a line pair, one pixel for white and one pixel for black.

The problem is that the mathematical theorem doesn't apply to the real world. For the math to work, there can be no spatial (or audio) frequencies of X or greater in the image (or signal) you're trying to digitize. The samples you take must be instantaneous. Finally, to accurately reconstruct the original signal from the samples, you must do an infinite amount of calculation.

The third problem is the lesser one. The first two are killers. The only frequency-limited signals are near-constant ones. Any signal that varies rapidly in space (or time) has frequency components that exceed the sampling limits. Those create false frequencies when you reconstruct the image. Realistically, you're safe if the signal is constant for 15 or 20 wave cycles, but most real-world images (and audio) have content that changes a lot faster than that.

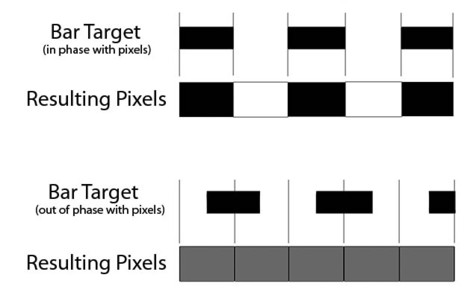

Audio can approximate instantaneous samples. Photography gets it wrong; a single pixel records the average signal strength over the entire sample window. That creates even more problems. I earlier described a line pair represented by one black pixel and one white pixel. Well, suppose you shift the whole scene by one half pixel (see figure 1). Now each pixel is seeing half a black line and half a white line; every pixel comes out 50% gray. This is not good!

Figure 1. Two pixels can't unambiguously resolve a line pair. When the white and black bars are lined up with the pixels (top figure), the pixels can record the bars with 100% clarity. But, when the bars are 90 degrees out of phase with the pixels, all the pixels will see half a white bar and half a black bar and report a uniform gray.
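The half-pixel shift is easy to check numerically. What follows is only a minimal sketch, assuming ideal pixels that average the scene evenly over their full width and bars exactly one pixel wide; the function name `pixel_values` and its parameters are made up for this illustration.

```python
def pixel_values(shift, n_pixels=4, bar_width=1.0, subsamples=1000):
    """Average a white/black bar pattern over each unit-wide pixel.

    shift is the offset of the bars relative to the pixel grid, in pixels.
    White bars count as 1.0, black bars as 0.0 (an idealized scene).
    """
    values = []
    for p in range(n_pixels):
        total = 0.0
        for s in range(subsamples):
            # Sample position inside pixel p (midpoint rule).
            x = p + (s + 0.5) / subsamples
            bar_index = int((x - shift) // bar_width)
            total += 1.0 if bar_index % 2 == 0 else 0.0
        values.append(total / subsamples)
    return values


print(pixel_values(shift=0.0))  # bars lined up with the pixels
print(pixel_values(shift=0.5))  # bars shifted by half a pixel
```

With the bars aligned, the pixels should alternate between full white and full black; with the half-pixel shift, every pixel should report the same mid-gray.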

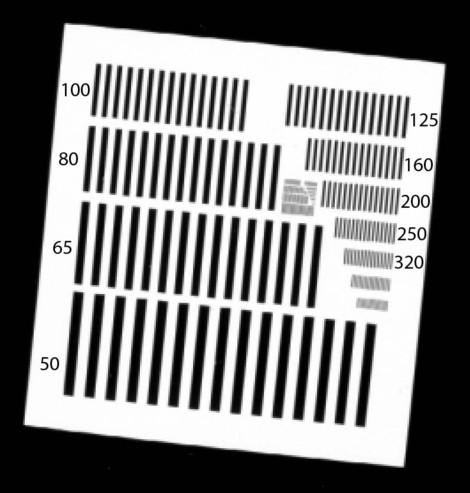

Most of you have seen this in action; it's the moire patterns you get when the fine detail you're trying to photograph approaches the pixel size (figure 2). Putting it in mathematical terms, phase differences are getting confused with amplitude differences.

Figure 2. Sampling failure in action. This resolution target was scanned at 300 ppi. The scan indeed "resolves" around 300 lines (150 line pairs) per inch, but there's substantial moire, inversion and distortion because of aliasing. It would be a lot cleaner, although not perfect, at half that frequency: 150 lines per inch.

You don't notice it much with randomly oriented fine detail, but the distortions still exist. Fortunately our eyes aren't very sensitive to them; unfortunately, our ears are. That is why digital photographs can look so subjectively good and digital audio sound so subjectively bad.

The real-world fix is "oversampling" (really, it's merely sufficient sampling). Sample at four times the frequency you want to record and it's pretty good. Sample at 8 times and it's really good. But sample at the Nyquist-Shannon limit of only two times that frequency? Don't expect "high fidelity"; it's simply not possible.
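To put rough numbers on "pretty good" and "really good", here is a small sketch under simplifying assumptions: a pure sine wave as the subject, ideal pixels that average over their full width, and contrast retention as the only yardstick (it says nothing about moire or color). The names `pixel_average` and `worst_case_contrast` are invented for this example.

```python
import math


def pixel_average(pixel, period, phase):
    """Mean of sin(2*pi*x/period + phase) over the pixel [pixel, pixel + 1)."""
    k = 2 * math.pi / period
    return (math.cos(k * pixel + phase) - math.cos(k * (pixel + 1) + phase)) / k


def worst_case_contrast(pixels_per_cycle, n_phases=360, n_pixels=64):
    """Smallest recorded swing over all tested phases, as a fraction of the
    original wave's full swing (1.0 would mean nothing was lost)."""
    worst = float("inf")
    for i in range(n_phases):
        phase = 2 * math.pi * i / n_phases
        values = [pixel_average(p, pixels_per_cycle, phase) for p in range(n_pixels)]
        worst = min(worst, (max(values) - min(values)) / 2.0)
    return worst


for rate in (2, 4, 8):
    print(rate, round(worst_case_contrast(rate), 3))
```

Under these assumptions the worst-case contrast should come out at essentially zero for 2 pixels per cycle, roughly 0.64 for 4, and roughly 0.90 for 8, which lines up with the rule of thumb above.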

___________________

Ctein

Ctein,

At the risk of sounding like a fanboi, I really enjoy how your posts meld science/engineering and the art.

Posted by: Peter | Saturday, 01 September 2007 at 03:16 PM

"Audiophiles have long been aware that the 44KHz sampling rates for digital sound are not adequate for full fidelity. Mathematically-savvy audiophiles and signal specialists even understand why."

As an electrical engineer who's worked in the audio industry I can say that it depends on the definition of "full fidelity". Any digital representation of an analog signal will differ from the original, both mathematically and in practice. The issue is what magnitude of difference you're willing to accept as being good enough. I personally designed a blind listening test and had some "golden ears" take it, and at least in this test their predictions as to which audio sounded better were completely off.

Posted by: Michael Czeiszperger | Saturday, 01 September 2007 at 04:57 PM

The inequality in the Nyquist theorem is a strict one - the sampling rate has to be greater than, not equal to, twice the bandwidth limit.

A square wave will never be bandwidth-limited, as its Fourier components are of the order of 1/n for the nth harmonic. The Nyquist reconstruction would exhibit the Gibbs phenomenon, but only be different from the pure square wave by a signal that is higher than the sample frequency. Whether the ear or the eye can detect a difference between A and A+B when they cannot detect B itself is a matter of debate, of course.

Posted by: Fazal Majid | Saturday, 01 September 2007 at 05:07 PM

Dear Fazal,

Quite so! For the sake of brevity, I didn't get into the strict inequality-- technically quite correct. Probably should have alluded to it, since the popular error is that 2X sampling is exactly correct.

Also correct on differences between sine and square waves. For audio, these points are important. For photography, with its integrating pixels, you can redraw my diagrams using a sine wave of period slightly greater than 2 pixels. You'll see that substantial distortions still arise, in the form of long period beats, as the signal drifts in and out of phase with the pixels.
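A minimal sketch of that redraw, assuming ideal full-width integrating pixels and an arbitrarily chosen sine period of 2.1 pixels; `integrated_sine` and `local_swing` are names made up for the illustration.

```python
import math


def integrated_sine(period, n_pixels, phase=0.0):
    """Average sin(2*pi*x/period + phase) over each unit-wide pixel."""
    k = 2 * math.pi / period
    return [
        (math.cos(k * p + phase) - math.cos(k * (p + 1) + phase)) / k
        for p in range(n_pixels)
    ]


def local_swing(values, window=4):
    """Recorded light-to-dark swing within each run of `window` pixels."""
    return [
        max(values[i:i + window]) - min(values[i:i + window])
        for i in range(len(values) - window + 1)
    ]


recorded = integrated_sine(period=2.1, n_pixels=84)
swing = local_swing(recorded)
print("strongest local contrast:", round(max(swing), 2))
print("weakest local contrast:  ", round(min(swing), 2))
```

For this 2.1-pixel period the recorded contrast should fade and come back about every 21 pixels, even though the subject itself has perfectly uniform contrast.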

pax / Ctein

Posted by: Ctein | Saturday, 01 September 2007 at 06:09 PM

Well.. yes and no. Speaking of correct resolution of the sound at 22kHz - yes, the 44 kHz sampling is not enough even if we assume that the 22kHz we capture is a pure sine form and we reproduce it as a sine form. However, I do not know a person around me (other than myself) who would recognise the difference between 10kHz and 12kHz or even hear the 22kHz. I do not remember the exact statistics but I know for sure that for the vast majority the 22kHz of reproducible range is way beyond their capability. Why do they reproduce it up to 22kHz? Because the ear is more sensitive to the harmonic distortions at the lower frequencies. So, they give the public a reasonable compromise: 22kHz is double the recognizable range, which makes the audible distortions insignificant. Which makes the 44kHz sampling rate quite acceptable.

I was lucky to get to play with a two-million dollar audio lab where I could test myself and choose the sampling rate. Yes, my hearing is sort of unique, I won the bet; no, I cannot hear the difference between 44kHz sampled sound and 100kHz sampled sound - this was my lost bet to the guy who wanted to prove to me the statements of the previous paragraph.

Posted by: Dibutil | Saturday, 01 September 2007 at 06:43 PM

My working life seems to revolve around sampling, and I think you did a nice job of explaining some interesting sampling-related issues.

Any chance you could follow up with a post about anti-alias filters that camera-makers put in front of their sensors and their impact on images?

Posted by: John Lazenby | Saturday, 01 September 2007 at 06:55 PM

The comparison with high-end audio is good in more than one way. Audiophiles may well be correct that in an ideal world a 44kHz sampling frequency is not enough. But of course, in the real world that sound - however sampled - will almost always be pushed through a cheap integrated amplifier (or tiny, noisy amp-on-a-chip in a computer sound card), forced through some telephone wire and out through a pair of overloaded speakers bought because they sound a lot rather than well. In practical terms, 44.1kHz is sufficient for just about all sound applications out there.

And in the same way, while it's instructive to think about the limits of resolving power of our camera sensors, in reality for all but a few users, the final image will not depend on that factor. Their - our - images will be limited by being processed on a screen that is not perfectly calibrated, or not calibrated at all, in a room with lighting inappropriate for editing and different from when we calibrated the screen; then printed on an inexpensive consumer inkjet, or via a photo processing outlet, alternatively downscaled five to ten times and sent via email or a web page to be viewed by (pretty much guaranteed) uncalibrated monitors in bad lighting.

Yes, just like audiophiles we can and should care about this. But we need to keep in mind, in the back of our heads, that in actual use this is a rather minor thing to worry about. If not, we run the risk of ending up like some audiophiles who can spend a weekend optimizing their titanium speaker feet, but never listen to anything other than a test CD.

Posted by: Janne | Saturday, 01 September 2007 at 08:57 PM

Dear Janne,

Well said!

I wrote about a very small piece of the whole, umm, picture. Obsessing about this to the exclusion of anything else is a lot like being one of those avid amateurs who'd ask for advice on what the very sharpest film was to take on their vacation... and would drop off the rolls at the local drugstore for processing when they got back.

pax / Ctein

Posted by: Ctein | Saturday, 01 September 2007 at 09:53 PM

Ahh, the old oversampling argument. In my more than three decades of processing audio and images digitally, it’s about the only thing that has stayed the same. It used to drive my advisor, Tom Stockham, nuts (look him up on Wikipedia under “Thomas Stockham” if you have never heard of him). Nowadays I find it oddly comforting. It’s nice to have something that remains constant.

Defining the meaning of “high fidelity” is not much easier than defining the meaning of love, but the overwhelming prominence of audio sampled at 44.1 and 48 kHz leads me to believe that the great majority of people consider that to be high fidelity.

Pick your favorite sampling frequency and enjoy the music. Go shoot interesting images. As you say, it’s not worth obsessing about it.

Posted by: Randy Cole | Sunday, 02 September 2007 at 12:25 AM

is this wonderful test chart (figure #2 in the ctein article) downloadable from somewhere on the net?

Posted by: Christer Almqvist | Sunday, 02 September 2007 at 01:53 AM

Ctein, do you know of a single test where a high sampling rate music sample is successfully ABXed from a 48kHz sampled one?

From the article:

"The first two are killers. The only frequency-limited signals are near-constant ones. Any signal that varies rapidly in space (or time) has frequency components that exceed the sampling limits."

That's not true, in the real world. If you define band limited as "zero energy outside the band", then yes, it's true. If you define bandlimited as "energy outside the band is well below the noise floor", as makes sense for practical applications, it's not really true.

"Finally, to accurately reconstruct the original signal from the samples, you must do an infinite amount of calculation."

Not true, as long as your signal is time limited. Plus, modern oversampling DACs do an extremely good job of reconstruction with very simple filters. Sure, "perfect" reconstruction is not possible in the real world - but reconstruction where all the distortion products are below the noise floor is well understood.

"Now each pixel is seeing half a black line and half a white line; every pixel comes out 50% gray. This is not good!"

Not if you choose your reconstruction filter carefully, to match the sampling process. Camera sensors essentially average the data over an area - a process which requires different reconstruction filters to delta-type sampling. As Fazal rightfully pointed out - a square wave is not bandlimited, so your example is a little misleading.

"Most of you have seen this in action; it's the moire patterns you get when the fine detail you're trying to photograph approaches the pixel size (figure 2). Putting it in mathematical terms, phase differences are getting confused with amplitude differences."

Indeed. I recently read a camera review where the reviewer complained about Moire artifacts and "loss of resolution" due to the antialiasing filter. Unfortunately - you can't have it both ways.

Janne said:

"But of course, in the real world that sound - however

sampled - will almost always be pushed through a

cheap integrated amplifier (or tiny, noisy amp-on-a-

chip in a computer sound card),"

That's neither true nor accurate. Much as audiophiles love to pour scorn on opamps, the truth is that modern designs are much, much better than any other amplifier technology which has existed in the past, in both linear and non-linear measures.

Sure, tube gear sounds great (I would own some if I could afford it), but it's not objectively better in any measure to a modern high performance opamp based design. In fact, it's a lot worse - and that's the real charm.

Posted by: Marc Brooker | Sunday, 02 September 2007 at 07:28 AM

like one of the posters above, i think it would be interesting to think about the effects of antialiasing filters (and subsequent sharpening) on the system. i strongly suspect that for most situations other than test charts under perfectly bad alignment conditions, these elements do a good job to solve the problems raised in the original post; that is, we get real detail at lower sampling rates than you are suggesting. not that greater sampling isn't nice too.

there was some gentleman who posted on luminous landscape purporting to show that diffraction turned our 16mp sensors into 2mp sensors at f/16, whose argument looked somewhat similar to this. trouble was, in practice (i tested it) he is absolutely wrong.

Posted by: chris | Sunday, 02 September 2007 at 03:05 PM

Hey, people,

The import of this column is NOT about audio. I could care less about the quasi-religious audio quality wars.

I thought people might appreciate the connection from audio to photography, but don't get fixated on a mere passing reference to the former to the exclusion of the latter. What I'm really writing about is photography, OK?

Also, this column is not prescriptive, but descriptive. I'm explaining why it's wrong when people quote the Nyquist limit as gospel and why you don't actually get X lines of resolution from X pixels. Lotsa ways to get 'clean' images, but nothing's gonna give you X for X, no matter that lots of folks believe you can.

Just so's ya knows.

pax / Ctein

Posted by: Ctein | Sunday, 02 September 2007 at 05:12 PM

Dear John,

The anti-aliasing filters in cameras limit the "bandwidth" to minimize aliasing problems. I can't give you a detailed explanation of the consequences, because the blankety-blank Bayer array stuff complicates things. You're not imaging across monochrome pixels but alternating RGBG pixels. When you get spatial frequencies close to the pixel frequency, you get different 'lines' on different color pixels. Gets real messy.

Lloyd Chambers has a lovely photo of this on his web site:

http://diglloyd.com/diglloyd/2007-07-blog.html#20070727Monochrome_vs_Color

pax / Ctein

Posted by: Ctein | Sunday, 02 September 2007 at 05:18 PM

Dear Randy,

Yeah, oversampling is usually overkill.

Visually, we don't need perfect fidelity and I can't imagine folks willingly throwing away substantial resolution to get it. So, in cameras, we rely on fuzz-making Bayer filters and in scanners we live with the artifacts.

pax / Ctein

Posted by: Ctein | Sunday, 02 September 2007 at 05:22 PM

Dear Christer,

Sorry, they're physical targets I have. Printed by yours truly in the darkroom from a high-resolution glass plate years ago. (Yes, *wet* photography!) They don't exist in digital form.

pax / Ctein

Posted by: Ctein | Sunday, 02 September 2007 at 05:25 PM

Dear Chris,

Anti-aliasing filters work to limit bandwidth. They inevitably reduce sharpness below X lines for X pixels. That's what's important in my post-- you can't get 1 for 1.

Scanners don't have anti-aliasing filters. The photo was a realistic scan result (not a digital camera photo). The function of the photo is to make the problem clear, but it will really exist with any scan of non-bandwidth-limited material. You just won't readily observe it because we don't notice distortion of this type unless it occurs in a regular pattern.

Wow, someone figured out that if you stop your lens down too far the pictures get fuzzier!? I gotta write that down. Stop the presses!

It's got nothing to do with what I wrote about, though... unless that's how you want to limit your bandwidth [grin].

pax / Ctein

Posted by: Ctein | Sunday, 02 September 2007 at 05:35 PM

i'm not sure it's worth trying again after your first response, but i'll give it one more shot.

i assumed, perhaps erroneously, that given your interests you would have already come across and so been familiar with nathan myhrvold's essay/trainwreck in response to charles johnson's description of how diffraction comes into play--or doesn't--in our digital cameras over on luminous landscape. the argument hinged on what role nyquist limits played in the system.

regardless, if your main point here is that you can't expect to resolve 'x lines for x pixels', then i am not sure you've made your point.

i think that because of the way image resolution (pixels) gets reconstructed (interpolated) from photosite data, which generally has been blurred first and sharpened later (and this all was what seems to have tripped up myhrvold), we do in fact commonly get x line pairs of resolution for 2x pixels. and in fact, in test images of non-straight line targets (and the preponderance of straight line targets for that matter), that is what i see. so for those of us using digital cameras, it would seem to me that your post is misleading--it suggests that we're getting substantially less resolution than we are actually getting. the resolution may have some errors in it, and for certain purposes (most of them having little to do with photography outside of scientific or surveillance purposes) that may be a problem, but mostly it's not.

yet, perhaps, we can both agree that it's nice to have more resolution when you want it.

Posted by: chris | Monday, 03 September 2007 at 05:52 AM

Ctein, a related observation I made some time ago: when rotating an image digitally by about one degree (something we do all the time), surprisingly strong and visible distortion can be introduced, depending on image content. I attributed this to the same phenomenon of sub-pixel displacements and rounding errors. The quite obvious solution I have found is to enlarge the image to, say, 2x2 the size, then rotate, then size it back. It seems to solve the problem. Maybe I'm describing something everyone knows already but hey, sometimes we can't help but reinvent the wheel.

Posted by: gbella | Monday, 03 September 2007 at 08:36 AM

Dear Chris,

OK, found the thread you're referring to. Yes, train wreck's a good description of the whole thing (not just Nathan's contribution).

Nyquist is only mentioned in passing. It's not central to their conversation.

I've got a lot of problems with BOTH of their articles. No, I'm not going to dissect them, technically. Not that interesting. Suffice to say there's a lot of technical "missing the forest for the trees" going on all around.

My essay was very limited in scope: it was explaining how most people fundamentally misunderstand the limits on sampling and fling "Nyquist" about like they know what they're talking about. That's where it starts, that's where it ends.

But, as for your statement that "we do in fact commonly get x line pairs of resolution for 2x pixels"... if you're talking about Bayer array cameras, that's flat-out wrong (not to mention impossible). No camera or digital back's ever been made that comes anywhere close to that. The very best I know of is a Kodak medium format back that would deliver 80% of that (1 lp/2.5 pixels). It was way off the curve; a good current Bayer array camera is around 65% (1 lp/3 pixels). The poorest are down around 55% (1 lp/4 pixels). But you have never, ever seen Bayer cameras deliver 1 lp/2 pixels and you never will.

This is not a Nyquist issue, by the way.

pax / Ctein

Posted by: Ctein | Monday, 03 September 2007 at 02:19 PM

ctein--

perhaps i am not using the terminology correctly.

i just took some photos of a dollar bill with a canon 5d+100mm macro. looking at the file at 400%, the bars in the eagle's shield are just under two pixels each bar. i have accurately defined lines (in the eagle's tailfeathers, for instance) that are one pixel across, and match pretty well the size of the original (ie, at that distance those faint lines in the tailfeather should in fact be about a pixel across).

are you saying that isn't what should happen, or am i misunderstanding you?

Posted by: chris | Tuesday, 04 September 2007 at 02:24 AM

[Forwarded from Ctein]

Dear Chris,

You commented: "...i have accurately defined lines (in the eagle's tailfeathers, for instance) that are one pixel across.."

OK, that's pretty interesting. But I think it's showing something different than you think it is. If I've correctly identified the lines you're referring to, these are isolated lines that are separated from adjacent ones by several line widths. In which case, your camera isn't *resolving* a single-pixel line, rather it's *detecting* a single-pixel line. You can detect much smaller features than you can resolve. Photographing a star field at night, for example. The stars are micropixels wide, at most. Our cameras can record the brightness difference between a pixel that contains a star and one that doesn't. But we don't resolve the star. Resolution is measured by how close you can get two stars (or lines) to each other and still see them separately.

Now, assuming I've correctly identified the feature you were talking about, I still think it's pretty interesting. It says that the algorithms in the camera that synthesize the full color photo from the Bayer array are really good at identifying and rendering edges. In other words, the acutance is excellent. But that's different from resolved detail.

pax / Ctein

Posted by: Mike | Wednesday, 05 September 2007 at 02:46 AM

Ctein,

Maybe you could add some of your expertise to this email I recently sent to some photo friends?

"Photo Guys,

I spent last week out photographing and was a bit worried that I shot so much with my Sony DSC-R1 at f/16. Today I did resolution tests on the Sony camera to see if I screwed up the week's work by shooting at f/16 so much. I was worried about diffraction making all the images a bit soft, like used to happen with my view camera lenses. To my shame and embarrassment, I never did do tests with the Sony to see which of the f/stops was the sharpest, so I was really gambling this week. Results of today's tests: all f/stops are equally sharp with no evidence of either diffraction at f/16 nor a fall off at the other end of f/2.8. Boy, was I relieved!

Also, as long as I had the chart out, I did comparisons for ultimate resolving power. I used the PLI lens test chart I've been using now for 30 years. For background, the worst lens/film combinations I've ever tested would only resolve 28 lines-per-mm; almost all lens/films would reach 40 lpm at their best, and occasionally I could even see a spectacular lens/film combo reach 56 lpm -- but somewhat rarely. I was surprised the first time I tested a digital camera (my old Fuji s602) and found that it resolved 75 lpm. I was so shocked that I ran the tests three times to triple check the math. (You photograph the chart at exactly 26 times the actual focal length of the lens to equalize the results for different lenses. Note ACTUAL focal length, not the phony "equivalent" focal length cited in digital cameras.) Later, I was thrilled to find that my new Fuji s7000 resolved 85 lpm.

Today my Sony tested at 100 lpm, the highest resolution I've ever measured in 30 years of testing. Not only that, but it had no chromatic distortion, no spherical distortion, and no coma distortion. It did have considerable curvilinear distortion at 24mm focal length, but that can be corrected in Photoshop easily enough. All in all, I was thrilled with the results.

So here is my theory -- about which I am on considerably shaky ground. Since digital cameras don't have the degradation introduced by the film's resolution, they seem to actually have a higher resolution than film cameras because they are only limited by the lens alone. Not being an engineer, I am not certain about this, but it is clear that -- for whatever the scientific reason -- digital cameras seem to have better resolution of fine detail when compared directly to lens/film combinations. Makes sense when you think about the math -- 1/resolution-of-the-system = 1/resolution-of-the-lens + 1/resolution-of-the-film. If the film only resolves about 60 lpm, even with a lens that were to resolve 200 lpm the lens/film system would only resolve a little less than 60 lpm. My pet theory is that film lenses were never made for much better resolution because anything more than the film would resolve would be wasted. Now that digital cameras don't have the same resolution limitations imposed by film, the lenses in digital cameras are made to much higher resolution standards. This would explain -- if my theory is right -- why old film lenses used on digital cameras give resolutions in the 50 lpm range (I've tested several) while the lenses manufactured specifically for use on digital cameras test so much higher -- at least in the 75-90 lpm range.

I'd love to have someone who is knowledgeable about both lens design and chip resolution explain all this with authority. All I know is that as a working photographer, I sure do appreciate the extra resolving power of these cameras and their ability to manifest detail. If any of you know someone you could pass this email on to who might be able to shed light on this, I'd appreciate it. I'd prefer to know, rather than continue to speculate from the position of uneducated observation!"

Ctein, as you can see, a lot of uneducated speculation here that may be way off the mark. But, I thought you might have some knowledge that would help me understand this.

BTW, in practical terms, this also manifests itself this way: With film, I always found that a 4x magnification was about the most I could go with the results my eye would accept -- e.g., a 16x20 from 4x5 film, a 9x13 from 6x9cm roll film, etc. A 3x enlargement was always pretty safe. A 5x enlargement was rare and required a great negative and the right subject. By comparison, I seem to get the equivalent quality of image from my Sony up to a 16x enlargement (a 21.5mm sensor makes a 13" wide print with ease). I can only non-scientifically explain this by assuming that the observed resolution increase from my test chart results along with some Photoshop sharpening allow for this increase in magnification ratio.

Your thoughts? I'd love to know if I'm even close in my layman's analysis of this.

I can send test chart image files, if you'd like.

Brooks

Posted by: Brooks Jensen | Wednesday, 05 September 2007 at 09:29 AM

Hi, Brooks!

I'm entirely willing to believe the digital cameras are resolving in the range of 80-100 lines per mm. I'm not sure that's a useful measure, the way it was with film, though. There are so many different-sized chips out there, and regardless of chip size, pixel count correlates better with resolution than anything else. For instance, today's 10-12 megapixel cameras all seem to resolve in the range of 2100 lines horizontal and vertical, give or take 10%, even though the sensor sizes vary over a factor of five.

But backing up a bit, there are some technical details you haven't got exactly right. First of all, sensor resolution does count, just as film resolution did. It's just a lot more difficult to measure directly, because what you're really looking at is a synthetic image constructed from an RGBG pixel array. The synthesis is not intuitive!

Also, the more accurate equation for resolution is not the sum of the reciprocals, but the sum of the squares of the reciprocals. That is,

1/systemresolution^2 = 1/lensresolution^2 + 1/filmresolution^2 + 1/focusresolution^2 ...

I don't know why the reciprocal-sum equation keeps making the rounds; it's demonstrably incorrect for normal photographic systems.

Be that as it may, there are really three factors that primarily affect resolution (assuming you're working on a tripod): lens resolution, film/sensor resolution, and accuracy of focus. The latter should not be dismissed! In film cameras of all formats it was frequently the largest source of blur.

Film resolutions have improved with time. For films developed prior to 1980 (roughly) the slower films had resolutions of 100-125 lp/mm. For films developed afterwards, 125-200 lp/mm. That's generally true for B&W or color, slide or negative.

Unfortunately I can't speak to large format lenses, having never tested them. The farthest I've gone is 6 by 7 cm format in my tests. So we're really operating in two different worlds. But I can tell you that decent 35 mm format lenses often show diffraction-limited performance at f/8, some of them at f/5.6. That corresponds to peak resolutions of 200-300 lp/mm. Medium format lenses aren't quite as good, but 200 lp/mm is not that uncommon at optimum aperture.

Putting those together, it would imply that 35 mm work should routinely hit well over 100 lp/mm and medium format work 90-100. What's usually the killer is accuracy of focus. Going back to the equation I gave above, imagine for 35 mm work that you've got film, lens, and focus accuracy all resolving 150 lp/mm. That only gets you about 80 lp/mm recorded on film at best! And note that the focus accuracy we're demanding is five times more stringent than the range typically given for depth of field.
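For anyone who wants to plug in their own numbers, here is a small sketch of the sum-of-squared-reciprocals rule alongside the plain reciprocal sum it replaces. The function names are invented for the example, and the 200/60 pairing is the lens/film case from Brooks's email.

```python
import math


def system_resolution(*components):
    """Combine component resolutions (lp/mm) by summing squared reciprocals."""
    return 1.0 / math.sqrt(sum(1.0 / r ** 2 for r in components))


def reciprocal_sum_resolution(*components):
    """The plain reciprocal-sum rule, shown only for comparison."""
    return 1.0 / sum(1.0 / r for r in components)


# Film, lens, and focus accuracy all at 150 lp/mm.
print(round(system_resolution(150, 150, 150), 1))

# A 200 lp/mm lens on 60 lp/mm film, under each rule.
print(round(system_resolution(200, 60), 1))
print(round(reciprocal_sum_resolution(200, 60), 1))
```

Three equal 150 lp/mm terms should come out a bit under 90 lp/mm, in the same neighborhood as the figure quoted above. For the 200/60 pair, the squared form should land in the high 50s while the plain reciprocal sum drops to the mid 40s.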

Incidentally, best I ever got in a 35 mm film test was 160 lp/mm on film, honest to God measured. Imagine how perfectly everything had to be working to achieve that! Not something I was ever able to repeat.

Medium format is even worse; the difference between where you think you're focusing and where the film actually is can be tenths of a mm and it will vary from frame to frame. Consider that if you're working at f/11 and your film is two tenths of a mm off of where it should be, the focus resolution itself is already down to 55 lp/mm.
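The 55 lp/mm figure can be reproduced with a back-of-the-envelope model. This sketch assumes simple geometry (blur circle diameter equals focus error divided by f-number) and takes resolution as one line pair per blur diameter; both are simplifications chosen because they match the number above, not a claim about how it was originally derived. The function name is hypothetical.

```python
def focus_limited_resolution(f_number, focus_error_mm):
    """Approximate resolution ceiling (lp/mm) set by a focus error alone.

    Assumes blur diameter = focus error / f-number and one resolvable
    line pair per blur diameter.
    """
    blur_diameter_mm = focus_error_mm / f_number
    return 1.0 / blur_diameter_mm


# Film sitting 0.2 mm away from the plane of focus at f/11.
print(round(focus_limited_resolution(11, 0.2), 1))
```

The same arithmetic applied to a 1 mm error at f/32 gives about 32 lp/mm.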

Sheet film cameras are really bad. Not only is the film not held terribly flat, but you're focusing entirely by eyeball. Errors of a mm or more are not uncommon. There's a reason for stopping down to f/32!

OK, on to digital cameras. A lens delivering diffraction-limited performance at f/16 can yield almost 100 lp/mm. For an electronically-focusing camera, I might assume very little focus error ... although that assumption may be entirely wrong! But let's make believe. Sensor resolution? I honestly don't know, but you're typically talking about pixels these days that are 6-8 microns in size, so they're definitely smaller than the blur circle of the lens.
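As a rough cross-check on the diffraction figures, here is a sketch using the Rayleigh criterion with green light at 550 nm. Both the criterion and the wavelength are assumptions picked for illustration; other criteria give somewhat different numbers. The function name is made up.

```python
def rayleigh_resolution(f_number, wavelength_mm=0.00055):
    """Diffraction-limited resolution (lp/mm) under the Rayleigh criterion.

    The wavelength defaults to 550 nm (green), an assumed value.
    """
    return 1.0 / (1.22 * wavelength_mm * f_number)


for n in (5.6, 8, 16):
    print(f"f/{n}: {rayleigh_resolution(n):.0f} lp/mm")
```

Under these assumptions f/16 should come out a little over 90 lp/mm, with f/8 and f/5.6 falling in or near the 200-300 lp/mm range mentioned earlier for good 35 mm lenses.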

So, yes, I can believe that with a really good camera you'd see very little drop off in resolution as you stop down.

In any case, I don't think the theory is terribly important. You can find lots of people on-line theorizing about resolution figures, diffraction limits, and all sorts of stuff like that. All the ones I've read have it substantially wrong (which is not to say that I have it right, either). The real proof of the pudding is what you're doing; running careful tests with your lenses and your camera to see exactly how it does perform, instead of depending on theoretical calculations of how it should perform.

pax / Ctein

Posted by: Ctein | Thursday, 06 September 2007 at 02:11 AM

"Sheet film cameras are really bad."

Ctein,

Do you remember when Howard Bond figured out a very simple way to measure his 8x10 film holders? Essentially, he placed one ruler across the holder where it seated at the edges--equivalent to where the ground glass was--and used another one to measure down to where the film was. And he ended up promptly throwing out half his film holders.

I remember another photographer who was doing long exposures at night with a 4x5 who would take five small bits of double-sided tape and stick them to the inside of his holder, gently insert the film, then press the film against the tape to hold it in place. His supposition was that during long exposures, the opened holder was exposing the film to humidity and the film was actually moving around during the exposures.

Wow....

Mike

Posted by: Mike | Thursday, 06 September 2007 at 04:15 AM

Dear Mike,

Yeah, I remember Howard's report. I don't recall if he gave actual runout numbers, do you?

I'd never thought about the problems of making long sheet film exposures, but I do believe it. I routinely work with 8x10 and larger sheet film in the darkroom for my dye transfer work, and glass carriers and vacuum easels are an absolute must. Take a sheet of film out of the box, lay it on a table, and it's likely to start curling in a few minutes. Not badly, but enough to throw off registration... or focus in a view camera.

Incidentally, it was Bob Shell who clued me into the bad runouts in 120 and 220 film cameras. He ran a bunch of tests and said it was really, really awful. He wouldn't tell me which models did better or worse, or give me precise numbers; I think he'd done it under contract and the results were owned by someone else. But he did say it was kind of amazing we got results as sharp as we did.

pax / Ctein

Posted by: Ctein | Thursday, 06 September 2007 at 09:40 PM

OMG. Audiophiles. NO! Please No! Go buy your five thousand dollar power cables elsewhere.

Posted by: Other MJ | Sunday, 21 December 2008 at 01:41 PM