By Ctein

This week I'm going to explain why RAW files aren't quite as raw as you think they are. This has been one of the most difficult of my columns to write. It all makes complete sense and is pretty simple...once you understand it. Getting to that understanding isn't easy: Mike, Oren Grad, and I had to go back and forth for several days before we finally grokked this stuff, and it was only due to the able guidance of the brilliant Eric Chan of Adobe that we ever got there.

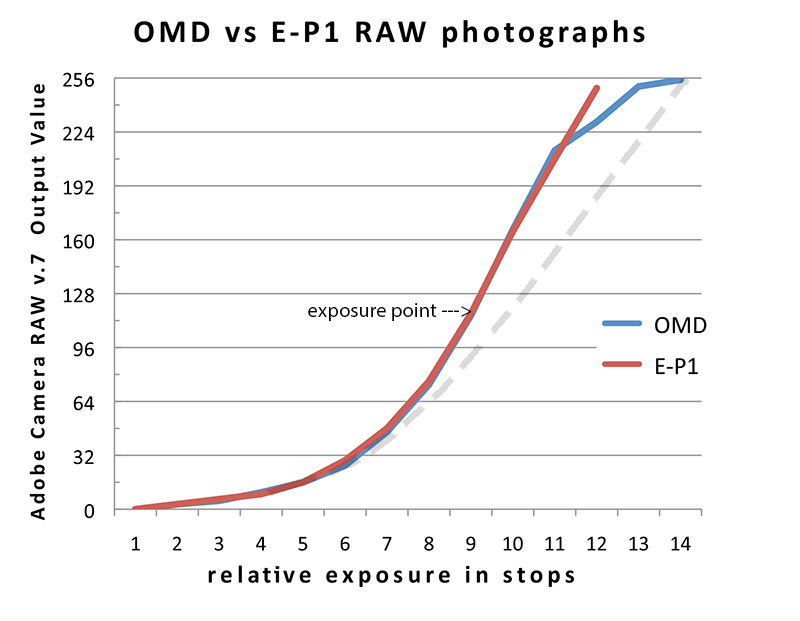

Fig. 1. Here are plots of exposure vs. converted values for my two Olympus cameras. Not what you'd expect, if RAW conversions really were straight interpretations of sensor behavior. You'd expect the OMD curve to have the same overall shape, like the dashed gray line.

When I got my Olympus OMD, one of the first things I did was run a test to compare its exposure range to that of my Olympus Pen E-P1. Figure 1 shows a plot of my results (running both sets of RAW photographs through ACR version 7 using my default settings). I wasn't surprised that the OMD had a substantially longer exposure range; pretty much everyone had reported that. What surprised me was that the "characteristic curves" had very different shapes for the two cameras. Up through the middle-light grays, both cameras produced almost the same output values for the same exposures, but the E-P1 values shot straight up through 255, while the OMD showed a pronounced rolloff in the highlight contrast.

Well, that explains why so many people like the "look" of the OMD photographs; they have a more film-like characteristic curve, and the highlights are less likely to blow out unpleasantly. Even if a photograph did have blank areas in the highlights, the very low contrast in that part of the curve means that there wouldn't be these glaring holes in the middle of the composition; there would just be a gradual fade-out of detail.

But why the different curve shape? I thought RAW data was pretty much just what came out of the sensor (scaled to fit the number of bits in a RAW datum, of course). The sensors in the two cameras don't have differently shaped characteristic curves; they are pretty linear devices. So, why do the two RGB conversions appear so different? Shouldn't the OMD curve look more like the gray dashed line in the graph, just a stretched version of the E-P1 curve?

As they say in polite circles, WTF?

An absolute prerequisite for understanding what's going on is the column I wrote two weeks ago, "Why ISO Isn't ISO." Readers must take it as a given that camera manufacturers set camera ISOs to make what they consider the best-looking photograph. ISOs are not fixed by the sensor characteristics.

I've marked one point on the RAW characteristic curves of figure 1 as the "exposure point." This is the value you get out of the camera if you make an exposure of a uniformly lit surface; it's the camera's version of "average gray." In the case of both of my Olympus cameras, it's around 115 (it may be modestly different for different cameras). Whatever the camera maker decides is the proper ISO for their camera, that exposure still has to render as average gray or the picture will look too light or too dark.

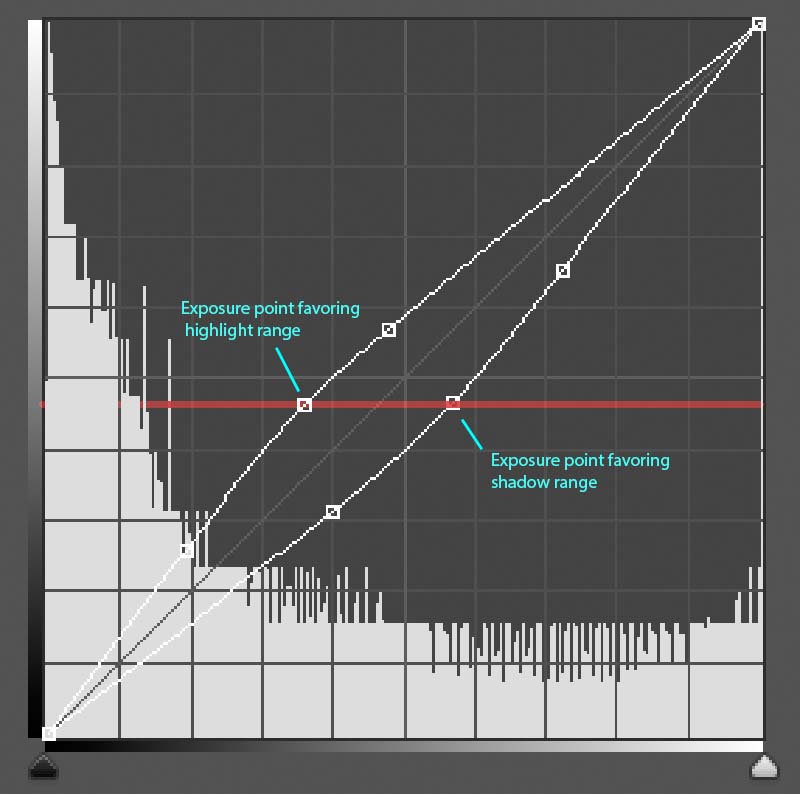

Fig. 2. A set of hypothetical gray scales, covering the range of a sensor from no exposure on the left (black) to full saturation on the right (white). Changing the ISO set-point changes the distribution of tones.

So, on to figure 2. The top gray scale represents the output for the entire exposure range of some sensor (that I made up), starting from no exposure on the left to an exposure that will blow out the highlights on the right. The second gray scale from the top shows that same range with a vertical black bar marking that nominal, average gray exposure point (value=115). If the camera maker wanted an output that looked just like the top gray scale in this figure, this is where they would set the ISO point.

Now, suppose the manufacturer wanted to favor highlight range, adding more headroom there to avoid blown-out highlights. You'd get something like the third gray scale from the top, with the exposure/ISO point moved to the left (less exposure). The thing is, if we don't change the rendering of those values, that photograph is going to look underexposed because that point won't be converted to average gray. To get the exposure to look correct, the tones in the gray scale need to be shifted, which I've done in this illustration. The shadow values open up more quickly and the intermediate values become lighter to ensure that the ISO exposure is rendered with a value of 115. The total range is still from black to white, but the distribution of tones within that range has changed; the curve shape is different, with more contrast in the shadows and less in the highlights.

Conversely, if the manufacturer wanted to favor the shadows and sacrifice some highlight range, they might move the exposure higher (more exposure), as in the bottom gray scale in the figure. Again, an adjustment of tones is necessary to ensure that that ISO exposure point reproduces as a value of 115. Now, the midtones and highlights are darker than nominal instead of lighter, so the photograph won't look overexposed.
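The tone shift described above can be sketched numerically. This is only an illustration under stated assumptions: it uses a simple power-law curve (chosen here for convenience, not something the column specifies), the function names `gamma_for_exposure_point` and `render` are hypothetical, and the two biased exposure points are made-up numbers. The input `x` is a position along the gray scale from 0 (no exposure) to 1 (saturation).

```python
import math

AVERAGE_GRAY = 115  # output value the exposure point must render as
WHITE = 255

def gamma_for_exposure_point(p):
    """Exponent g such that WHITE * p**g == AVERAGE_GRAY, for 0 < p < 1."""
    return math.log(AVERAGE_GRAY / WHITE) / math.log(p)

def render(x, p):
    """Map a normalized sensor position x (0..1) to an output value (0..255)
    with a power-law curve that pins exposure point p to AVERAGE_GRAY."""
    return WHITE * x ** gamma_for_exposure_point(p)

nominal = AVERAGE_GRAY / WHITE  # exposure point that needs no adjustment (g == 1)
highlight_biased = 0.30         # assumed: less exposure, more highlight headroom
shadow_biased = 0.60            # assumed: more exposure, more shadow range

for p in (nominal, highlight_biased, shadow_biased):
    print(f"exposure point {p:.2f}: renders as {render(p, p):.1f}, "
          f"tone at x=0.5 is {render(0.5, p):.1f}")
```

In this sketch every exposure point renders as 115, while a fixed sensor position such as `x = 0.5` comes out lighter for the highlight-biased choice and darker for the shadow-biased one, which is the behavior the gray scales illustrate.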

Fig. 3. The same information that's shown as gray scales in figure 2 is shown here as curves. An ISO/exposure point that favors more highlight range lightens tones overall, while one that favors more range in the shadows darkens them, even though the average gray point remains the same.

I hope you're all still with me. Figure 3 conveys the same information as figure 2; it's just that here I'm showing it as a curves plot in Photoshop. If you're more comfortable reading curves than gray scales, pay attention to figure 3. If curves give you a serious headache, you can skip this paragraph. The faint gray diagonal line would be the curve for the nominal exposure point. The upper curve shows how the tones get adjusted to favor an extended highlight range. The lower curve shows how they get adjusted to favor more shadow range. The horizontal red bar marks the value (115) of the average gray.

Remember, the choice of ISO is arbitrary; it's a decision made by the camera manufacturer, in order to produce what they think is the best looking photograph, as I explained two weeks back. Any of these ISO points would be a legit choice; no one is more "correct" than another.

The exact shape of these curves is not fixed. In fact, it can be pretty much anything you want, so long as those ISO exposure points stay locked to an output value of 115. Messing with the curve shapes will change the look of the photograph, the same way choosing different films with different characteristic curves will change the look of those photographs, but the overall exposure will still be correct. Remember this; I'll get back to it in a bit.
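To make the "any shape, same anchor" idea concrete, here is a minimal sketch comparing two quite different curve families that both pass through the same exposure point. Both curves, their names (`power_curve`, `two_segment_curve`), and the exposure point of 0.30 are assumptions for illustration; neither is claimed to be what any camera or converter uses.

```python
import math

AVERAGE_GRAY, WHITE = 115, 255

def power_curve(x, p):
    """Smooth power-law curve pinned so that exposure point p renders as 115."""
    g = math.log(AVERAGE_GRAY / WHITE) / math.log(p)
    return WHITE * x ** g

def two_segment_curve(x, p):
    """One straight line from black to the exposure point, another on to white."""
    if x <= p:
        return AVERAGE_GRAY * x / p
    return AVERAGE_GRAY + (WHITE - AVERAGE_GRAY) * (x - p) / (1 - p)

p = 0.30  # assumed exposure point on a 0..1 sensor scale
for x in (0.0, 0.15, p, 0.65, 1.0):
    print(f"x={x:.2f}  power={power_curve(x, p):6.1f}  "
          f"two-segment={two_segment_curve(x, p):6.1f}")
```

The two curves agree at black, at the exposure point, and at white, and disagree everywhere else. That disagreement is the change of "look"; the agreement at the exposure point is what keeps the overall exposure correct.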

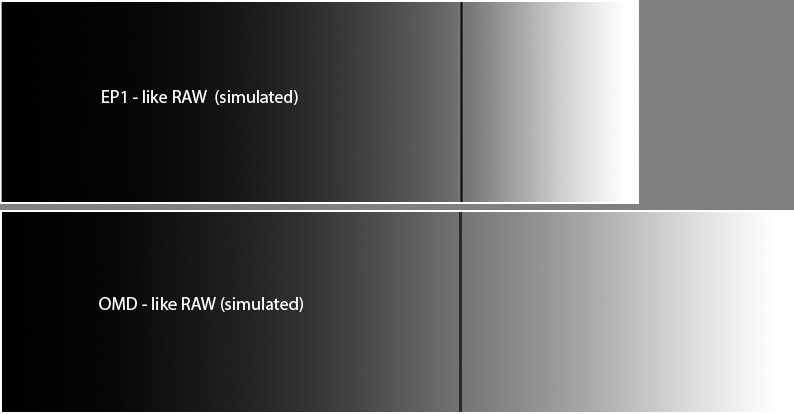

That's what happens when different cameras (or camera makers) settle on different ISOs for the same sensor. Now, what happens when the sensor changes, like in my OMD vs. my E-P1? That's simulated in figure 4. The OMD has a longer total exposure range, which corresponds to the greater width of the OMD gray scale. Up to the exposure point, both gray scales look very similar. Above that, the OMD scale is stretched out and has lower contrast to accommodate the greater exposure range between the ISO point and pure white.

Fig. 4. A simulated comparison between the Olympus E-P1 and the OMD. The OMD has several stops more exposure range (the longer grayscale); its ISO settings give all that additional range to the highlights.
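As a rough numerical counterpart to this simulated comparison, the sketch below renders two hypothetical cameras that share one curve up to the exposure point and differ only in highlight headroom. Everything here is assumed for illustration: the headroom figures (2.5 and 4 stops) are made up rather than measured from either Olympus, the shadow curve is arbitrary, and spreading the highlights evenly in stops is just one simple choice, not ACR's actual behavior.

```python
AVERAGE_GRAY, WHITE = 115, 255

def render(stops, headroom_stops):
    """Output value for an exposure given in stops relative to the exposure point.

    Shadows and midtones (stops <= 0) share one curve for every camera;
    highlights are spread evenly, in stops, over the available headroom.
    """
    if stops <= 0:
        return AVERAGE_GRAY * 2 ** (stops / 2.2)  # illustrative shadow curve
    if stops >= headroom_stops:
        return float(WHITE)                       # sensor saturated
    return AVERAGE_GRAY + (WHITE - AVERAGE_GRAY) * stops / headroom_stops

SHORT_RANGE, LONG_RANGE = 2.5, 4.0  # made-up highlight headroom, in stops

for stops in (-2, -1, 0, 1, 2, 3, 4):
    print(f"{stops:+d} stops: short-range={render(stops, SHORT_RANGE):5.1f}  "
          f"long-range={render(stops, LONG_RANGE):5.1f}")
```

Below the exposure point the two columns are identical. Above it, the longer-range camera gains fewer output values per stop (lower highlight contrast) and reaches 255 later, which is the stretched, flatter highlight region of the longer gray scale.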

Now, here's the import of all of this. Your RAW converter has to deal with this. It not only has to have that RAW data on how much exposure each pixel received, it has to know what the camera considers the correct ISO. Sometimes that information is there in metadata that the RAW software author can read (though not usually in the EXIF data); in some cases the author will need to make camera measurements directly. Regardless, a description of the camera's handling of ISO must get built into the RAW converter. The RAW converter needs to know this because it has to automatically make the kind of curves adjustment that's shown in figure 3. If it doesn't, a "normal" exposure won't yield a "normally-exposed" photograph.

In other words, the RAW converter has to be able to say to itself, "Aha, I know this particular exposure level is supposed to correspond to the average gray setpoint for this camera at this ISO, and I will adjust the curve shapes to ensure that it gets rendered correctly."

Now, exactly how it adjusts the curve shapes depends on the design of the converter. Looking back at figure 1, you can see that ACR doesn't do quite what I did in my simulations. It keeps the values tracking pretty much the same for both cameras until the light grays and then the OMD curve goes very flat. That's an arbitrary design decision in ACR; a different converter or a future version of ACR can decide to use a different characteristic curve, so long as it intersects the exposure point the same way.

All of this can be changed by the photographer, too. You can change the settings for the exposure slider and for the curves tool in ACR to render your RAW files with an entirely different characteristic curve shape. You're not locked into one particular characteristic curve. But that doesn't change the underlying fact—you can't give RAW photos from both cameras the same curve shape and have them both produce correct-looking exposures.
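The last point can be checked with a few lines of arithmetic. In this sketch (a power-law curve and two made-up exposure points, all assumptions for illustration), a curve built to render camera A's exposure point as 115 is applied unchanged to camera B's exposure point.

```python
import math

AVERAGE_GRAY, WHITE = 115, 255

def make_curve(p):
    """Return a power-law tone curve that renders exposure point p as 115."""
    g = math.log(AVERAGE_GRAY / WHITE) / math.log(p)
    return lambda x: WHITE * x ** g

camera_a_point, camera_b_point = 0.30, 0.45  # hypothetical exposure points
curve_a = make_curve(camera_a_point)

print(f"camera A gray through A's curve: {curve_a(camera_a_point):.1f}")
print(f"camera B gray through A's curve: {curve_a(camera_b_point):.1f}")
```

Camera B's average gray lands well above 115 and would look overexposed. Because any usable tone curve rises steadily from black to white, it can cross 115 at only one input, so a single curve cannot be right for two different exposure points.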

Think of it as being analogous to this: T-Max 400 and Tri-X have inherently different tonal renditions. You can change how you process the film and get different characteristic curves out of the two films, but you cannot make them look the same.

To sum up: Different cameras' RAW files cannot always be interpreted the same way, regardless of the RAW converter one uses, even if the cameras have identical sensors. RAW rendering isn't just determined by the black point and the white point of the sensor, it's also determined by the exposure point, and that is different for different ISO decisions. When cameras have different ISO or exposure points, then it's impossible for their RAW files to be rendered with the same characteristic curve and exposure. Just as different films are predisposed to different "looks," so are different cameras' RAW files.

Ctein

(Thanks to Dave Polaschek, David Dyer-Bennet, Oren Grad, Carl Weese, Jeffrey Goggin, Ken Tanaka, and especially Eric Chan for fact-checking this and for their great suggestions on how to make it more comprehensible.)

Ctein's regular weekly column on TOP appears on Wednesdays.

Original contents copyright 2012 by Michael C. Johnston and/or the bylined author. All Rights Reserved.

Links in this post may be to our affiliates; sales through affiliate links may benefit this site.

Featured Comments from:

Riley: "Shouldn't Wednesday come more than once a week?"

David Miller: "Bingo! I got it—and the first time through! Brilliantly written and a model of clarity: well done Ctein. Even better, it has practical application the next time I'm camera shopping, in helping me to understand the reviews and their implication for my particular style and preferences in photography. Thanks."

Mike Shimwell: "Good article Ctein, but I would take some issue with your title. :-) The issue you address is the interpretation of the RAW file (as you state throughout) not the 'rawness' of the data in the file.

"Adjusting the highlight curve to take advantage of the increased exposure range possible with newer sensors is a key way to obtain a more pleasing (film-like) rendering. It's also an approach I've used with my digital cameras by deliberate 'under-exposure' to give more highlight headroom and then adjusting the midpoint and highlight curve in raw processing.

"One area that still interests me is how raw is the data in raw files. Accepting the processing chain from the voltage output from the photon counter through the amps, ADCs, etc., at what point does any noise reduction or other processing become 'intrusive'? A few years ago Canon RAW files were thought to be less tampered with than Nikon (re dark current subtraction) and hence more useful for astrophotography. I wonder to what extent this remains the case and how the more modern sensor/processing systems compare."

Ctein replies: Article titles are a funny thing. It's amazing how much discussion goes on behind the scenes about what they should be. Being exactly factually correct is, surprisingly, not at the top of the list. For example (though not in this case) Mike frequently rewrites my titles, which lean towards factual correctness, because either they won't draw a reader into reading the article or, more seriously, they don't include important keywords that will allow search engines to find them. That's a much bigger issue. Clever wordplay (well, what the author/editor imagines is clever—readers don't always agree) counts for a lot, too.

If folks look through all my old columns, they'll see that many of the titles are not literal. Sometimes they're not even close. The one about fitting a pint into a 12-ounce can had nothing to do with beverages. My column of two weeks ago had the title, "Why ISO Isn't ISO," which was not merely misleading but entirely false, because the article was about how ISO really is ISO. There are legitimately several different kinds of ISO. It was a catchy phrase that got people to read. This week's title is a riff on that one, and it's a lot more factual.

So, just lay back and enjoy. You can't take the titles too seriously.

As for me writing articles that dig even deeper than this one, describing what goes on between the moment a photon hits the sensor and data gets written into a RAW file, I'm probably not going to write those articles (though I always reserve the right to change my mind). The reason is that I don't think they'd be particularly relevant to the photographic experience. It's not that I'm not interested in that stuff, but I'm interested in it in a more abstract way. I might extract nuggets of practical information to include in articles, but, honestly, I don't think there's much there that improves one's photography.

Truth is, I wouldn't have even written this column if I hadn't butted up against this "different curve shape" thing, which does directly affect my photography.

I was pretty much that way on the film side of things, too. I mean, I can take the process all the way back to the fundamental quantum-mechanical level. Doesn't mean I'll write articles about that. Heck, I wasn't that interested in ordinary photochemistry. With no offense meant to David Jay and Tinsley Preston, who put out an excellent magazine, I never even read the original Darkroom Techniques (which was only half-jokingly referred to as "The Journal of Applied Sensitometry and Photochemistry"). I like knowing how things work, but I'm not a gearhead.

Don't get me wrong. I don't think there's anything wrong with having that deep knowledge; all knowledge is good. But I haven't come anywhere close to exhausting the knowledge I want to write about, and none of us have come anywhere close to learning everything useful we could about photography. What goes on behind the RAW file is, in my judgment, not very important knowledge.

While investigating the Nikon D70, I discovered the camera was quantizing the sensor output, and Christian Buil discovered that Nikon does some sort of nearest-neighbor dead-pixel elimination that renders Nikons all but useless for astrophotography.

Ideally RAW would be a straight dump of the sensor readout, along with some metadata like white balance sensor values, possibly with some lossless compression applied to keep sizes manageable. The reason RAW is not so-raw is marketing. Camera makers know reviewers look for noise performance in RAW rather than JPEG images and cook the books (pun intended) by applying undisclosed noise reduction before writing to allegedly RAW files. Some vendors are just more deceptive than others about this practice.

Posted by: Fazal Majid | Wednesday, 10 October 2012 at 12:41 AM

The only thing I don't get is the title. It's misleading, because one may think that the article is about manipulating raw files in-camera before they are written. That's what a typical pixel-peeper would think in the first place ;-)

Posted by: Lukasz Kubica | Wednesday, 10 October 2012 at 01:16 AM

This discussion (and its related things) comes up pretty often on dpreview forums. Just search for posts by Iliah Borg or bobn2 and you'll learn more about raw files than you ever thought possible. Also, it would be great if Ctein could do an article on what exactly happens to the raw data before it gets written to the memory card (things like black-frame subtraction etc).

Posted by: Account Deleted | Wednesday, 10 October 2012 at 01:42 AM

More than a comment, I have a question.

We have seen the digital cameras undergo tremendous improvements over the last couple of decades, yet, there is still the fundamental weak point, which in my opinion differentiates digital from film output: the ability to record and render the highlights, particularly in B&W.

What is it that has to change in the way a digital sensor records light, to make it able to reproduce perfectly a, say, Tri-X characteristic curve? Is it DR, bit depth of an image, or is it simply a question of tweaking the way RAW files are being recorded?

Thanks.

Marek

Posted by: Marek Fogiel | Wednesday, 10 October 2012 at 01:43 AM

And that refers only to tone distribution... A few days ago a long article on geometrical correction data embedded in RAW files was published in Spain by Valentin Sama (a well-respected expert in gear and technical aspects of photography, à la Erwin Puts for Leica, only he's an Olympus guy), discussing its consequences for data quality and lens usability and/or longevity. It has stirred quite a hot debate in our photography media.

Maybe Google translate can help to figure out what he says for the non-initiated in Spanish:

http://www.dslrmagazine.com/pruebas/pruebas-tecnicas/sobre-lo-crudo-y-lo-cocido.html

Posted by: Rodolfo Canet | Wednesday, 10 October 2012 at 01:55 AM

Fig.3 has a white-shaded histogram on the bottom, but it has an odd distribution. What is it?

Posted by: Allan Ostling | Wednesday, 10 October 2012 at 02:09 AM

I'm not getting something. I would have thought these two cameras had totally different sensors. The OM-D has a Sony sensor. So I would have expected the RAW data from each to have been very different.

Posted by: Mike Fewster | Wednesday, 10 October 2012 at 02:32 AM

I still don't get it: So the camera applies some sort of sensor gamma? And the RAW converter is supposed to linearize it again? (Just to apply another gamma for sRGB ;-).)

This would make sense if the bit depth of the RAW were constraining, thus optimizing the luminance resolution. But it isn't. RAW values can be as fine-grained as desired, so a linear mapping of sensor response to RAW value seems most sensible to me.

Posted by: Torsten Bronger | Wednesday, 10 October 2012 at 03:31 AM

Let me see if I got this right: RAW converters have to treat raw data differently for different cameras to achieve similar results, but in the case of the OMD, Adobe also made the aesthetic decision to change the defaults beyond that, resulting in a different look of the images.

But doesn't this also mean the raw data is as raw as it gets for both cameras making the title and introduction a bit misleading?

Posted by: Chris | Wednesday, 10 October 2012 at 04:34 AM

How does this analysis compare with the DxO ISO measurements? Looking at the measurements for the E-P1 and E-M5, the difference in highlight headroom should be only 1/6-1/3 of a stop (unless you use ISO100 on the E-P1, in which case this increases by a stop).

This would suggest the difference is largely due to different ACR defaults - differences that are not just driven by the sensor characteristics. In other words, ACR could perhaps achieve a similar highlight response for the E-P1.

Am I missing something here?

DxO ISO comparison: http://www.dxomark.com/index.php/Cameras/Compare-Camera-Sensors/Compare-cameras-side-by-side/(appareil1)/793%7C0/(brand)/Olympus/(appareil2)/612%7C0/(brand2)/Olympus

Posted by: Simon | Wednesday, 10 October 2012 at 04:53 AM

Ctein, you are still combining two elements, the camera's sensor, as exposed with the manufacturer's recommendations, and ACR 7, which may or may not provide a profile that finishes the task as the manufacturer suggests. I have heard in the past that the manufacturer's advice to third party software developers (even the mighty Adobe) comes late and is often incomplete. It may not consist of more than black point, white point, and "exposure point" (a nice coinage) for a new model, plus color test cases, of course. So what can be said about the inherent capabilities of a new camera without having to factor in the software, which might be Adobe's, Phase One's, Apple's or ...?

scott

Posted by: scott kirkpatrick | Wednesday, 10 October 2012 at 05:16 AM

Dear Ctein,

If I understood this column (and the previous one on ISO) correctly, I get the following:

a) The way Raw is rendered is *pre-cooked* by the camera ISO.

b) Camera ISO differs from camera to camera even if they use the same sensor or are made by the same camera manufacturer.

c) Camera makers provide APIs or what not to third party software developers so that their camera's Raw files can be rendered "appropriately." Otherwise, they provide their own proprietary Raw converters (to ensure that the rendering of their camera's Raw files is not *overcooked*?).

d) The user can manipulate the Raw files of his camera *to taste* but within the limits set by the camera ISO. For example, the user can't expect the prints from pictures taken by different cameras he owns to look the same (in furtherance of a "style") even if he used the same "curves" in post-processing.

If all of the above is correct, (1) What then is the advantage of shooting Raw over JPEGs, if both are essentially the same banana (or should I say MRE)?

2) Do film photographers enjoy more degrees of freedom (assuming the availability of high speed film) than digital camera users? Are the speed limits of film as constricting as camera ISO in regards to developing a certain "look"?

3) Is "115 average gray" the same value as that of "accessory gray cards" being sold to manually set white balance correctly?

Last question (slightly off-topic), (4) Is there a rule of thumb for shooting (with a tripod) in available darkness equivalent to the "Sunny 16" rule?

Obligado.

Posted by: Sarge | Wednesday, 10 October 2012 at 05:34 AM

Thanks Ctein and all involved - this is a great article, which concisely puts across a complicated subject.

When I first started shooting RAW, I was certainly surprised to find such big differences between the RAW conversion from manufacturer's software versus Third Party A and Third Party B.

Bibble Labs (back when they existed) used to explain that it was due in part to differences in each company's calibration techniques - the targets and lighting used can make different cameras give different results because even if some components are identical, the software on it might have a different preference for exposure (for example). Kind of as you've explained here.

Because of that some targets and lighting rigs will be better than others, or just be biased towards a look you prefer. It boils down to "they're all a bit different, so you pays your money and takes your choice".

There are of course other differences between the RAW software that also affect the end result. But you should've seen how many people wouldn't accept that RAW wasn't quite RAW when it came to supporting their new shiny camera - "it has the same sensor, why not just say it's a model XYZ?".

It's great to see a simple and readable article about this with decent visual aids in it - I'm sure that this will be linked to thousands of times in its life, mostly by support staff in forums trying to explain why the Nikon D770000 isn't going to work out of the box on day one, despite having the same sensor as the D700000...

Mike - perhaps track the inbound links to this page, and invoice companies quarterly? ;-)

Posted by: Philip Storry | Wednesday, 10 October 2012 at 07:20 AM

I'm still confused. The RAW file is what comes off the sensor's analogue-to-digital converters, right? You're not saying that the sensor data is being massaged by software in-camera? I think what you're discussing might be the fact that the digital response of the ADCs to the analogue signal can be engineered to be relatively linear or non-linear, just as the responses of the pixels are engineered to be relatively linear or non-linear to the numbers of photons at different frequencies, within the constraints presented by the materials and processes that are used for present-day fabrication.

I don't see where you establish that "Different cameras' RAW files cannot always be interpreted the same way ... even if the cameras have identical sensors." The ADCs are on the sensor, so are you saying that there are significant manufacturing variations between sensors of the same design and from the same factory? I believe that Sony introduce hardware modifications of on-chip support circuitry for clients who buy sensors in large enough quantities, which might include the ADCs, so perhaps that's what you mean? The only other variation would be in the optical path, although the attenuation effects of lenses and filters are relatively linear, AFAIK, except as a function of position --but is that what you mean? It's somewhat unclear whether by "different cameras" you mean different examples of a given type of camera, or different types of camera, though I think you mean the latter.

Like I say, I'm still confused, so I expect my questions will be better put by someone else. Nonetheless, I think the overall flow seems pretty clear, from outside world, through the optical path, conversion to analogue, conversion to digital, storage (almost always neutral), RAW converter, then, perhaps the most complex conversion, of which you have said much here in the past, to printer or screen.

Posted by: Peter Morgan | Wednesday, 10 October 2012 at 07:37 AM

I always assumed that different sensors in different cameras gave different results, even at the RAW level. And isn't that why RAW converters have to be updated with every new model of camera even though file formats stay the same (.NEF for example), to account for those tweaks/differences?

Posted by: Jakub | Wednesday, 10 October 2012 at 07:51 AM

So, does ACR choose a good "characteristic" tone curve for a RAW or is this something a "good" photographer needs to examine and tweak? Does ACR try to balance things out across different RAWs to achieve a consistent "Adobe ACR" look?

I guess the same story applies to colour rendition as well.

The take-home message is that digital sensors, RAW files and converters are not neutral: each camera manufacturer and RAW converter developer adds their secret sauce.

My gripe with RAW is that camera manufacturers persist with their proprietary formats ... DNG doesn't seem to be getting any traction.

Posted by: Sven W | Wednesday, 10 October 2012 at 08:23 AM

Ctein, thanks for digging into this. But I need some clarification. When I started reading the article, I thought you were saying that the difference in the two curves in Fig 1 was the result of an innate characteristic of the Raw files (i.e., something baked in by Olympus) but in fact, it is the result of the application of ACR's profile for each of the cameras, using tone mapping specific to that camera, is it not? A less sophisticated converter might render each file completely linearly.

Also, in the later figures (re favoring highlights or shadows), don't those reflect different tone-mapping applied by the manufacturer to the linear sensor response during the in-camera conversion to JPEG rather than some characteristic of the raw file itself?

Thanks again for a nice wonkish article. :)

Posted by: Alan Fairley | Wednesday, 10 October 2012 at 09:12 AM

This would explain why so many photographers keep older cameras with lower MP and lower DR... they always say they like the output. Even I am keeping my EP2 for its "look" even though I have an OMD.

Posted by: Abraham | Wednesday, 10 October 2012 at 09:25 AM

Seems to me, the title should be ACR RAW is not Raw. And I would agree.

What does the RAW data from your OMD look like, vs the RAW data from your E-P1? Is Olympus's firmware applying curves to the sensor's raw data prior to writing that data out to a RAW file?

Posted by: Michael Litscher | Wednesday, 10 October 2012 at 09:51 AM

Canon's highlight tone priority does a similar thing, it lowers the exposure, but boosts the tone curve. Curiously, the raw data represents the lowered exposure, and Canon's DPP boosts the tone curve to make things look OK.

Apple's Aperture cannot read (or ignores) the HTP setting, so when you shoot raw with HTP turned on, Aperture's raw files come out under-exposed.

Posted by: KeithB | Wednesday, 10 October 2012 at 10:08 AM

Ctein:

Thanks for this column. It is extremely useful but starts raising other questions:

1) Given the examples above, it would seem that the "Expose To The Right" (ETTR) school of thought is placing more of the image on the top shoulder of the curve. In the case of the E-PL1 this seems to imply trying to wedge more of the data into a smaller array of data points. This should lead to a lower quality image, yet the ETTR folks claim the opposite. Is the significance of ETTR sensor dependent? Is it possible for ETTR to work well with some sensors and deliver poor results with other sensors?

2) The data appears to imply that the decision of which RAW converter to use may be as significant as the choice of camera/sensor combination. Or is it true that any competent RAW converter can provide results similar to any other converter if the user modifies the curve to deliver images of equal quality?

Thanks in advance.

Cheers!

Posted by: fjf | Wednesday, 10 October 2012 at 10:15 AM

I feel a headache coming on....

Posted by: Michael Steinbach | Wednesday, 10 October 2012 at 10:51 AM

Seems that the film "Zonies" of yesterday are the "Rawies" of today. Just show me the image!

Posted by: richard Rodgers | Wednesday, 10 October 2012 at 11:42 AM

Good article Ctein, but it has me thinking: there's more to it than that isn't there? For starters each R / G / B channel would respond slightly differently per camera -- so I'm wondering if that balancing happens during the dump to the RAW file or during the RAW tone mapping you talk about.

I'm personally hoping it happens in ACR during import! But now it also explains why I would want to do a tone mapping by channel.

Good article, makes me want to go out there and do some experiments ...

Pak

Posted by: Pak-Ming Wan | Wednesday, 10 October 2012 at 12:06 PM

As I understand it, DNG is a file format, not a data format.

The DNG file contains some meta-information about the image and optionally some post-processing instructions, as well as a description of how the raw un-demosaiced data is laid out. The raw un-demosaiced data itself is camera-specific, or at least sensor-specific.

Given this, calling DNG files "non-proprietary" or "standard" and suggesting that if a camera maker could "just output DNG files" it would solve all problems with reading them is missing the point of DNG.

It's more like the TIFF standard, which doesn't even specify the byte order, bit depth, or encoding, than the JPEG standard, which guarantees that any JPEG reader can read and decode any JPEG file.

The non-standardness of the DNG format is a good thing because it allows for new, unimagined sensor technology (although they seem to take their time with some non-Bayer patterns).

Of course I could be wrong, I read the documentation years ago and thought it reminded me of the old Amiga IFF standard, said "cool" and started using it.

Posted by: Hugh Crawford | Wednesday, 10 October 2012 at 12:17 PM

I've said it before and I'll say it again: when Ctein talks like this, it just makes my head hurt. I know I will never have to contemplate stuff like this hard and long because he will do it for me. Bless him.

Posted by: Rick Wilcox | Wednesday, 10 October 2012 at 12:30 PM

I think your article is quite clear. However, it is specific to the RAW interpretation of Adobe Camera Raw. Each raw converter has its own interpretation of the RAW file. This doesn't mean that RAW is not RAW. It still is. But you can't see the raw data directly; it needs to be read. I think it's that simple. So depending on how the raw data is interpreted, it will produce vastly different results. It's up to the photographer to adjust the reading to what "looks" the best.

I also fully disagree with you that files produced by camera X and camera Y cannot be made to look the same. Yes they can! Maybe that is not what you meant to say, but that's how I interpreted it. You can easily manipulate the sliders and curves so that the images out of cameras X and Y look the same. If what you wanted to say was that you cannot use the same settings on cameras X and Y to get the same looking image, then I agree. I don't even think you can use the same settings on the same camera X with different version numbers, as there will be differences, as noted by many users who share their Adobe presets for a specific camera model with other users of the same camera model.

Posted by: David Bateman | Wednesday, 10 October 2012 at 12:52 PM

Thanks. Worth waiting for...

Posted by: Jay Tunkel | Wednesday, 10 October 2012 at 01:34 PM

I think when Olympus first started playing with the exposure point, what some people really wanted was to be able to change that back and forth in the camera from a more traditional curve to the new one as desired. The only way to get the more linear, less highlight friendly curve was to shoot iso 100, which they have removed from recent cameras. My E5 has iso 100, which they call "low noise priority," and iso 200 is called "recommended," and dxo measures both as around 120 iso.

It's interesting to open an OMD file in Raw Therapee, which doesn't seem to respect the desired exposure point. It provides a rather dim image, and seems to be a source of a lot of angst with the "Olympus is lying to us" folks. I wonder, is the exposure point information carried in the raw file, or is it something Adobe and others figure out by looking at JPEGs and then put in the camera profile?

Posted by: John Krumm | Wednesday, 10 October 2012 at 02:31 PM

JPEG for President!

Posted by: Bill Mitchell | Wednesday, 10 October 2012 at 03:04 PM

Seems like nothing you wrote really says raw data isn't raw data (I still believe some raw files are more raw than others even though you didn't write that). You are just saying that different cameras require different interpretation of the raw data when displaying the photograph or converting to a jpeg or tiff.

I think all you are really doing is busting the myth that if all cameras produced the same raw file format (i.e. DNG) that good raw support for new cameras would be automatic (recently taken to the absurd extreme that DNG output from Sigma's Foveon cameras would magically allow automatic support in Lightroom).

Posted by: John Sparks | Wednesday, 10 October 2012 at 03:46 PM

Dear Marek,

It's something of all three–– really, when you're up in the 13-stop exposure range, there's enough there to nicely emulate a Tri-X curve (folks who think otherwise should actually look at characteristic curve plots for films). But a lot of this lies in where the ISO point gets set and how you adjust your curves. Many of the folks today who are complaining about lack of headroom in digital files compared to film are really demanding more from the digital files than they ever did from film. They want more shadow range with less noise than they ever got out of Tri-X, at the same time that they want the extended highlight range.

It's entirely fair to want that, just understand that it's demanding more from the digital file than you got from film.

~~~~

Dear Allan,

Oh, that's just the histogram for figure 2. I generated figure 3 by taking screenshots of the Photoshop curve layers I used to create the different gray scales in figure 2. You can ignore it; it's not telling you anything interesting.

~~~~

Dear Mike,

Sensor manufacturer is utterly irrelevant, but you're right that different sensors are involved. But, here's the thing. All Bayer array sensors are pretty linear devices–– for every X photons they deliver Y photoelectrons up to the point where they saturate. RAW files are pretty much linear mappings of that (theoretically they don't have to be, but it makes RGB conversion immensely simpler). So let's say that some sensor saturates at 100,000 electrons and the camera makes 10-bit RAW files, that is, there's about 1000 values possible for each RAW datum. So, 25,000 electrons is going to become a RAW value of about 250, 50,000 electrons a value of 500, etc.

Whatever the exposure range and dynamic range of the sensor, it's still getting mapped that same way. Pure black gets a RAW value of zero, maximum white gets the maximum RAW value, and everything in between gets mapped more or less linearly. If you have two sensors with two different exposure ranges, one's image's “characteristic curve” will be stretched out compared to the other's, but you'd still expect them to have the same shape, like the dashed line in figure 1. The fact that they don't tells you there's more going on with RAW than just this linear data.
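As a rough sketch of that linear mapping, using the made-up numbers above (the function name, the rounding, and the hard clip at saturation are illustrative assumptions, not a description of any real camera's pipeline):

```python
def electrons_to_raw(electrons, full_well=100_000, bit_depth=10):
    """Map a photoelectron count linearly onto a RAW value.

    full_well and bit_depth are the made-up numbers from the example above,
    not the specifications of any real sensor.
    """
    max_raw = 2 ** bit_depth - 1                  # 1023 for a 10-bit file
    clipped = min(max(electrons, 0), full_well)   # the sensor saturates at full well
    return round(clipped / full_well * max_raw)


# Two hypothetical sensors: the second saturates two stops higher.
for full_well in (100_000, 400_000):
    values = [electrons_to_raw(e, full_well) for e in (25_000, 50_000, 100_000)]
    print(full_well, values)
```

Both sensors produce a straight line from zero to the maximum RAW value; the second line is simply stretched over a longer exposure range. A purely linear model of this kind cannot produce the shoulder seen in the OMD conversions.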

pax \ Ctein

[ Please excuse any word-salad. MacSpeech in training! ]

======================================

-- Ctein's Online Gallery http://ctein.com

-- Digital Restorations http://photo-repair.com

======================================

Posted by: ctein | Wednesday, 10 October 2012 at 03:47 PM

To add to what others have said above, m4/3 lenses tend to have relatively massive distortion corrected at the raw level. Many of the lenses for the system are actually wider than indicated on the lens barrel, but the camera corrects the distortion and crops, which gives a field of view as indicated on the lens barrel.

Posted by: GH | Wednesday, 10 October 2012 at 04:07 PM

Dear Peter,

Please go back and reread my ISO article. This isn't about hardware differences in the chips. This is about the different ways camera manufacturers legitimately use that hardware. ISO settings are NOT specifically hardware-derived (only influenced). Different ISO settings demand different tonal interpretations and different curve shapes or the overall exposure will not look correct.

~~~~

Dear Sarge,

1) A whole bunch of stuff gets permanently baked into a JPEG that isn't baked into a RAW file. Even this ISO/exposure point isn't actually baked into the RAW, it's just meta-information that advises the RAW converter on how to make the most desirable interpretation. But you, and the converter, are free to ignore it.

JPEG fixes the curve shape, the exposure value, the color temperature, and the amount of sharpening and noise reduction. It also gives you only 8 bits per color channel instead of the greater bit depth of the RAW data, which limits how much manipulation you can do later without seeing artifacts. Equally serious, for me anyway, is that the standard JPEG curve in a camera throws away a couple of stops of exposure range that exist in the RAW file.

2) I would say that film photographers enjoy fewer degrees of freedom. That isn't a good or a bad thing. It just is.

3) These days I have no idea how digital camera makers are doing their metering, but the ISO standard for metering at least used to be that the reference point was a 13% equivalent reflectance. Most gray cards that are sold are 18%, a half stop lighter which is why many film photographers erroneously concluded that the film makers were overrating their films. See POST EXPOSURE (free from my website) for much more about this. I'm not going to expand upon it here. If that material raises more questions for you than it answers, e-mail me privately.

4) With digital cameras, about 1 second at f/2 at ISO 400 records about everything you can see with the naked eye.
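The "half stop" figure in point 3 can be checked with a little arithmetic, since one stop is a factor of two in reflected light. This is only a sketch; the helper name is made up for illustration:

```python
import math


def stops_between(reflectance_a, reflectance_b):
    """Difference in stops between two reflectances (one stop = a factor of 2)."""
    return math.log2(reflectance_b / reflectance_a)


# 13% metering reference point versus a common 18% gray card
print(round(stops_between(13, 18), 2))
```

The result is a little under half a stop, which is consistent with the rounded "half stop lighter" description.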

pax \ Ctein

Posted by: ctein | Wednesday, 10 October 2012 at 04:21 PM

Dear Sven, Alan and John K,

This “innateness” business is a little subtle. The ISO/exposure point isn't baked into the RAW file's data (it is in any JPEG the camera creates), but it's a necessary piece of metadata if you want to get a correct interpretation of that RAW data. And, frankly, we don't really give a damn what the RAW data looks like. We don't view RAW data; we only view how it gets interpreted as an RGB image.

According to Eric, that meta-information can get conveyed in any of several ways. It may be in metadata that is accessible to the author of the RAW converter (though not in the EXIF file), it may be in technical information that is conveyed by the camera manufacturer to the author, or the author may have to figure it out themself by running tests. And, as John points out, the RAW converter doesn't even have to honor that information.

But if the converter doesn't, then any photograph made using an ISO/exposure point that deviates from the linear one will open up as being too light or too dark. In which case, the end-user will likely create a customized set of exposure and curves adjustments for that converter and apply them to make the image look “normal.” In other words, the end-user manually does the same sort of thing an ISO-savvy RAW converter does automatically.

In my later figures that demonstrate favoring highlights or shadows, they also represent adjustments that the RAW converter *MUST* make in some fashion, or the RGB conversions will be too light or too dark.

In other words, from the technical point of view the ISO is not baked into the RAW, it is merely advisory. But aesthetically, it's mandatory.

~~~~~~

Dear fjf,

We are not going to discuss ETTR. You can read my opinion of it here.

Yes, as I've mentioned in a previous comment, different RAW converters do all sorts of different things. There's far more than just curve shapes involved.

pax \ Ctein

Posted by: ctein | Wednesday, 10 October 2012 at 04:56 PM

+1 for the post by GH. My big concern about pre-RAW tinkering is not if the camera manufacturer is trying to squelch a few defective pixels or smooth some unsightly noise. It's when the manufacturer is trying to manipulate lens performance, e.g. messing with light fall-off at the corners or correcting problematic distortion effects in order to bring the consumer what appears to be a much better lens performance than is really achieved. When this pre-RAW image editing is done, the resulting "RAW" data being written to the memory card is having some of its inherent goodness taken away to compensate for less than optimal lens quality. Aargh!

Also, a question for Ctein and/or other experts. Anyone know if camera companies (Fuji comes to mind) specify different spectral transmission properties for the RGB filters placed in front of the silicon detector? If, for example, the filter properties are tweaked to camera company specs, then the same underlying sensor response could yield very different characteristics when doing the RAW conversion in RAW convertor software. In other words, is a 16 MP Sony sensor in a Nikon 7000 necessarily the same as one that could end up in a Fuji or Pentax camera? I'm curious whether the camera manufacturers who rely on other chip fabricators have some custom input into the final chip they receive, notwithstanding other circuitry they add elsewhere in the camera.

cheers,

Mark

Posted by: MHMG | Wednesday, 10 October 2012 at 05:03 PM

Looks like we are back to "blaming the camera". When we shot film, "real photographers" developed and printed their pictures. Everyone else, including photolabs, shrugged and said it's the camera's fault, or the user's.

Digital cameras have replaced film. But there is no substitute for composing and printing your own work. It's called "post-processing" these days. How many people spend 10 hours working on a picture, just like we used to do in the darkroom, going through sheets of paper just working on a single image?

Posted by: ben ng | Wednesday, 10 October 2012 at 05:39 PM

Mark and GH, I'm pretty sure that m43 raws do not arrive already lens corrected, but the lenses send the correction info with the raw and the converter handles it. But you can't undo it, since that's how the cameras are designed to work. DPR has written on it. There are programs that will open the raws without the corrections if you want to see the difference (I think the site Lenstip does this for comparison). And of course they don't correct non-m43 lenses attached via adapter.

Posted by: John Krumm | Wednesday, 10 October 2012 at 05:41 PM

Ben,

I don't see how anyone is "blaming the camera" or even speaking of doing so. Ctein's not even offering value judgements; he's just trying to describe one aspect of what happens in the chain, that's all. No "blame" to be found.

Mike

Posted by: Mike Johnston | Wednesday, 10 October 2012 at 06:04 PM

We beat this whole raw format idea to death during the beta testing of LR4....some very!! insightful info from Eric et al...really required reading to get a sound footing in this matter of raw data.

http://forums.adobe.com/thread/946966?start=0&tstart=0

http://forums.adobe.com/thread/948951?tstart=0#948951

In regards to the JPEG matter of having only 8 bits, Sigma with their Foveon X3 sensor offers a high quality setting in the YCbCr color space with a sampling rate of 4:4:4 yielding 12 bits of data for the 4 pixels. This 444 sampling is drooled over in the video editing world as it allows high latitudes for editing and grading footage...with the exception of the Red camera everything is JPEG !! So why this aversion of shooting jpeg for still photogs? Hell, we never had this raw editing power shooting film; it really was nothing more than bracketing. :)

Posted by: DenCoyle | Wednesday, 10 October 2012 at 06:37 PM

Dear Mark,

I don't believe aberration corrections are being applied before RAW data gets written. Some RAW converters can correct for chromatic and geometric aberrations based on metadata or information they've received from the manufacturers, but it's not forced by the RAW file. In fact, there are RAW converters out there that will happily ignore this information, and they are useful diagnostic tools for figuring out just what's going on optically. That's how I determined that the smearing I objected to in the corners of the Olympus 12 mm f/2 lens was due to correcting for **excessive** barrel distortion, rather than due to astigmatism or coma.

Lens design is a compromise, a trade-off between the different aberrations. For a given size, weight, and price of lens, if you want, say, less geometric distortion, you'll pay for it with an increase in other aberrations. Software correction is just one more tool in the arsenal and it is no more inherently objectionable than, say, aspheric or diffractive lens elements.

Many lenses that are software-corrected for geometric distortion produce exquisite image quality from corner to corner. Objecting to software correction of aberrations is simply a prejudice.

I don't know the answer to your filter question.

pax \ Ctein

Posted by: ctein | Wednesday, 10 October 2012 at 06:48 PM

"This 444 sampling is drooled over in the video editing world as it allows high latitudes for editing and grading footage...with the exception of the Red camera everything is JPEG !! So why this aversion of shooting jpeg for still photogs?"

DenCoyle,

Still photography has always had to look better than moving photography, at every stage. The reason's pretty simple...you can stop and study a still frame but not a moving one (well, you can stop the video NOW, but you know what I mean--it's not intended to be looked at that way).

I don't think there's been any time when standard/conventional/average still photography wasn't of better quality than the moving pictures of the same era.

(And of course a lot of still photographers aren't actually averse to JPEG.)

Mike

Posted by: Mike Johnston | Wednesday, 10 October 2012 at 07:18 PM

"...trying to wedge more of the data into a smaller array of date [sic] points. This should lead to a lower quality image..."

fjf - I'll take a shot at addressing your ETTR question, since Ctein won't, and I slightly disagree with his ETTR article*.

The reason ETTR can help an image, contrary to some information on quite a few internet sites, has nothing really to do with how many "levels" exist in the data. ETTR simply increases the signal to noise ratio (in the loosest sense of that term) by overexposing as much as possible WITHOUT going so far that any highlights get blown.

Simply put, the bright highlights are the lowest noise part of any image, and the dark shadows are the noisiest part. Move the exposure away from the shadows and toward the highlights and you'll minimize noise in the image. Bring it back down to the "correct" exposure in software and voila - less noise than you would have had otherwise.

The number of levels is not the reason that works.
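One way to put rough numbers on this is a toy model in which photon shot noise is the only noise source, so a signal of N photoelectrons carries noise of about sqrt(N). That model is an assumption added here for illustration (real cameras also have read noise and other sources), and the electron counts are invented:

```python
import math


def shot_noise_snr(photoelectrons):
    """SNR when photon shot noise dominates: signal N, noise sqrt(N)."""
    return photoelectrons / math.sqrt(photoelectrons)


shadow = 100  # hypothetical shadow signal, in electrons, at the metered exposure
for stops in (0, 1, 2):
    n = shadow * 2 ** stops   # each extra stop of exposure doubles the signal
    print(f"+{stops} stop(s): {n} e-, SNR ~ {shot_noise_snr(n):.1f}")
```

Under this model every extra stop of exposure improves the shadow SNR by a factor of about 1.4, and pulling the image back down in software scales signal and noise together, so the improved ratio is kept.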

*Ctein's article posits that ETTR is useless because it's unnecessary with current equipment; And that you'll probably ruin more shots than you help. I would argue that I don't care if it's necessary or difficult as long as it can possibly help. If the scene is low contrast enough, you can still minimize noise by exposing as far to the right as possible so that highlights aren't blown at all.

Posted by: David Bostedo | Wednesday, 10 October 2012 at 07:40 PM

Ctein, in your article you pose the question: "Why the different curve shape?"

Perhaps the answer lies in this explanation about a feature of some professional Sony video cameras:

http://www.cinematography.net/Files/Panavision/Sony/shootingTips05.pdf

Posted by: Craig Norris | Wednesday, 10 October 2012 at 08:21 PM

Mark - I've seen published measurements of some sensors' spectral response based on their RGB filters (for instance, this one from Maxmax).

And I have no idea how much, if at all, raw converters know about this or take it into account.

Posted by: David Bostedo | Wednesday, 10 October 2012 at 08:58 PM

To MHMG:

Yes, the color filter arrays used by different camera manufacturers are different, and the "same" sensor used by different manufacturers will not necessarily have the same color response.

RAW converters need camera-specific information about the color response to be able to "unpack" the Bayer matrix and create an image with R, G and B values at every pixel. Different converters interpret this information in different ways, and can produce different color renditions. My understanding is that this information is not provided to outside software developers by the manufacturers; converter writers have to figure out the various coefficients from their own tests.

Another important point is that R, G and B color response is not "clean" - there is considerable overlap in the spectral ranges to which the nominally single-color elements respond. This affects the camera's ability to differentiate between subtly different colors, and is reflected in the coefficients that the RAW converter uses to unpack the Bayer matrix. DxOMark reports information on this under the "Color Response" tab in its camera reviews.

Posted by: Oren Grad | Wednesday, 10 October 2012 at 10:05 PM

The title of the other article should have been, "Why ISO isn't iso" - that is, "iso" being Greek for "same."

Posted by: Clay Olmstead | Wednesday, 10 October 2012 at 10:35 PM

You're right. But it's still better than a jpg.

Posted by: Nathan | Thursday, 11 October 2012 at 12:05 AM

Playing with RawPhotoProcessor, especially using it for RAW files from different cameras, really helps in understanding these and a couple of other things, like gamma and how WB works.

Unlike in ACR, by default you'll see the equivalent of the dashed line in Fig. 1 for the OM-D, and you can then adjust the default processing if you want something like the blue curve.

Even if in the end you'll use something else for your work - it's a great learning tool.

Posted by: Doroga | Thursday, 11 October 2012 at 05:12 AM

There's nothing too surprising in there, though Olympus ORF files are cooked even more than you might realise. Early raw converters for the E-30, for example, rendered images exposed at ISO 200+ very dark, probably because the images are actually ISO 100 images underexposed by a stop and then pushed (but you wouldn't see this in the raw unless the converter knows to do it). This protects highlights but also means noisier shadows on the old 12MP sensors.

I often bracket and ETTR with my Pen and it often pays off, even at times of the day when I wouldn't care to be taking photos with other cameras I've owned.

Posted by: Jason Hindle | Thursday, 11 October 2012 at 07:18 AM

This was really quite readable and makes a lot of sense. One thing I wonder, though, is there any way to know, without being able to actually test the camera, where its sensor might fall on such a scale? I mean is there some kind of spec that could be mentioned along with all the other "stats" that come with a review/tech info?

Posted by: Mike | Thursday, 11 October 2012 at 07:40 AM

Ctein, do the same sorts of gyrations happen with white balance in RAW?

Posted by: Steven Willard | Thursday, 11 October 2012 at 10:04 AM

To rephrase Ctein's finding: the root of this problem for ACR is that Adobe have always used the same curve and mid-tone for all camera raw images imported into Photoshop with ACR. The issue with the OMD (and other cameras?) is that this earlier design choice of curve/midtone point now leaves insufficient headroom for new sensors with extended dynamic range from midtone grey to saturation. They work around the problem by modifying the raw demosaiced data with a characteristic curve so that it rolls off into a shoulder and so "fits" into the "expected" gamma-corrected curve in the "brights" and below.

To me that sounds like a "legacy issue" (i.e. a design bug) for ACR/Photoshop who made a design choice some time ago to accommodate users expecting midtones at (or around) a particular corrected level on the same corrected curve.

This is not an issue with other raw convertors that don't carry Photoshop's legacy expectations.

Other raw convertors that don't take this approach (like Aperture and Lightroom) just use a 16-bit (or more) internal representation for the 10/12/14/16-bit raw data. They don't have a problem with higher dynamic range sensors because they put the available bits from the sensor in the top n bits of their integer (or float) and zero the lower bits. They have as a parameter the standard "exposure point" for that given camera. Using that data they can show a gamma-corrected histogram with midtone gray at 50% with the default settings for each camera.
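The "top n bits" packing described here can be sketched as a simple left shift. This only illustrates the operation as described; the function name is made up, and it is not a claim about what Aperture or Lightroom actually do internally:

```python
def to_16bit(raw_value, sensor_bits):
    """Left-align an n-bit raw value in a 16-bit word, zero-filling the low bits."""
    if not 1 <= sensor_bits <= 16:
        raise ValueError("sensor_bits must be between 1 and 16")
    if not 0 <= raw_value < 2 ** sensor_bits:
        raise ValueError("raw value out of range for the stated bit depth")
    return raw_value << (16 - sensor_bits)


# Half of full scale lands at the same 16-bit value whatever the source bit depth.
for bits in (10, 12, 14, 16):
    half_scale = 2 ** (bits - 1)
    print(bits, to_16bit(half_scale, bits))
```

With this packing, files of different bit depths share one scale, and the extra bits of a deeper file only add finer steps between the same end points.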

The other raw convertors are not going to have this problem until sensors exceed 16 bits of dynamic range. But it is interesting that Adobe have added floating point representations to the DNG 1.4 specification. Though that feature is called "High Dynamic Range" they clearly don't just have "wackily toned" images in mind. They're thinking about the future.

So, yes, the headline of the article is poorly chosen (as others have commented) and confused me for a while.

A related point: Olympus cameras (at least my E-PL1) have an "EXPOSURE SHIFT" control (in the cog-J Utilities menu) that allows one to add additional "exposure compensation" for each of the metering types (evaluative, center-weighted and spot). This is equivalent to shifting the "exposure point" or "moving the ISO" about on the characteristic curve. It's a very useful feature that's not present in some of my other cameras with "too hot" metering.

@MHMG: Yes, camera companies do have input into the dyes used, especially for the top-of-the-line cameras. It's one way of defining the "look" of the image (for color think of Nikon vs Canon vs Fuji). They can buy an "off the shelf" sensor or they can pay more and have one OEMed in which the "toppings" (e.g. microlens and other optics, CFA dyes and more recently PDAF pixel masking and special CFA layouts) on the sensor can be specified. It seems a lot of Nikon's recent sensors (Sony: D800/D600/D7000 and Aptina: Nikon 1) are another company's "base" sensor with Nikon additions in the "toppings". With those collaborations it becomes unclear "whose" sensor it is, though Nikon claims, vaguely, they're "Nikon designed".

@Bostedo: The raw "RGB" from the sensor isn't really RGB, i.e. it isn't referenced to a set of standard color primaries in the output color space; it's scene-referenced. All raw convertors have to know the color response of the sensor to convert it to a given color space. A nice feature of the DNG specification is that this information (the matrix to do the conversion) is carried along in the RAW DNG. Other RAW file formats may or may not include it, and the folks writing the convertor may have to experimentally derive it.
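To make "the matrix to do the conversion" concrete, here is a minimal sketch of applying a 3x3 matrix to one demosaiced camera-space pixel. The matrix values are invented for illustration and do not come from any camera or DNG file; a real converter also handles white balance and other steps that are left out here:

```python
# Hypothetical camera-to-output matrix. Each row sums to 1.0 so that a
# neutral gray in camera space stays neutral after conversion.
CAMERA_TO_OUTPUT = [
    [1.6, -0.4, -0.2],
    [-0.3, 1.5, -0.2],
    [0.0, -0.5, 1.5],
]


def convert(camera_rgb, matrix=CAMERA_TO_OUTPUT):
    """Multiply one linear camera-space RGB triple by a 3x3 color matrix."""
    return [sum(m * c for m, c in zip(row, camera_rgb)) for row in matrix]


print(convert([0.5, 0.5, 0.5]))   # neutral gray stays (approximately) neutral
print(convert([0.8, 0.3, 0.1]))   # a saturated camera color gets remixed
```

The off-diagonal terms are where the overlap between the sensor's color filters gets compensated, which is why the coefficients have to be specific to each camera.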

Posted by: Kevin Purcell | Thursday, 11 October 2012 at 01:41 PM

Dear Kevin,

You are not “rephrasing my finding;” I fear you may be getting it entirely wrong.

First, there is not a problem. There is merely the way things work. It is not possible to make a fixed choice of the exposure point relative to the total exposure range of the sensor, which is what you're asking for, without badly compromising image quality sooner or later. Even assuming you had agreement on what constitutes the best-looking image, that exposure point changes when you change the sensitivity, exposure range, or noise level of the sensor and the camera's processing circuitry. Those are constantly moving targets. If you had fixed the exposure point (and, by implication, the curve shape) five years ago, the results for today's cameras would be nowhere near optimal.

It's not a legacy problem. It's the opposite. If folks HAD fixed the parameters you want fixed, then we now would have a legacy problem.

Also, any RAW-displaying program that by default shows camera photographs with approximately the correct exposure is implementing the corrections I talk about. The precise curve shape it is using to implement them depends on the design choices of the software's authors, but the software is taking the exposure point into account and creating some kind of a customized characteristic curve as a result.

It is most apparent when a RAW converter doesn't do that; the default rendering looks very, very bad. Some RAW converters do work like that; they provide the user a crappy-looking-but-unmassaged starting point along with the controls needed to create their own personal set of defaults that produce normal-looking photographs from their camera. There's nothing wrong with that, if some photographer wants to bother with that. Personally, I would rather start with an image that looks approximately correct and alter it from there, but either approach works. Neither is better than the other. And in the broad conceptual sense, both types are fundamentally doing the same thing: constructing a customized rendering curve for that particular camera at that particular ISO.

In summary, this is not a flaw, this is not a defect in the Adobe software, and it is not a failure to establish a proper standard.

If you wish to argue about this on a more detailed technical level, it would be better to e-mail me privately. Mike prefers that these kinds of debates take place off-stage.

pax \ Ctein

Posted by: ctein | Thursday, 11 October 2012 at 02:45 PM

Dear Mike,

Hmmm, that would be doable, but it would be problematical. Y'see, what you're really talking about, to present anything useful, is showing the transfer curves, as I did in figure 1 (strictly speaking, you'd want a family of transfer curves for the different ISOs available in the camera). Personally, I would love to have that data in product reviews; it would save me time and work.

The thing is, there'd be a problem in agreeing on standards, and I'm not actually sure how useful it would be in the end.

If you think back to film characteristic curves, they were a function not just of the film but of the development. For instance, TMAX 100 developed in Xtol had much more rolloff in the highlight portion of the curve than when you developed the film in D-76 (where the curve stayed pretty straight and steep). The way most film makers dealt with this was to settle on a standard developer they'd use for their basic data sheets. It was usually something similar to D-76; in fact the ISO standard for determining film speeds specifies a particular developer that is not quite the same but very close to that.

There's a reason for that. Kodak was the 500 pound gorilla on the block, and D-76 was their standard developer.

So, the first thing you're going to have to do to start seeing such curves is to establish the standard developer for digital. Personally, I'd vote for Adobe Camera RAW, because I think it is the de facto standard, and it's what I use in my testing. But with all the turf wars and proprietary battles going on for digital dominance these days, folks wouldn't swallow that without a big fight.

A practical problem is that all of this is very much in flux. D-76 was kind of the best all-around developer for many many decades; others were optimized to be better at certain things, but until Xtol came along there was nothing that was objectively superior. That's absolutely not the case in RAW conversion; even within Adobe, Process 2012 not only has different controls from Process 2010 but it produces a somewhat different look in the resulting RGB image.

The reason I don't think it would be extremely useful is that if you're savvy enough to read and understand the transfer curves, then you're savvy enough to build your own custom ones and save them as preferences. That's why I was careful to specify that the curves in figure 1 were all made using my default ACR configuration, which is slightly different from Adobe's default, but not so much that the results are going to look seriously wonky to folks using Adobe's. I didn't do anything to mess with the curve shapes in my custom default… but I could have if I didn't like the ACR rendering.

pax \ Ctein

[ Please excuse any word-salad. MacSpeech in training! ]

======================================

-- Ctein's Online Gallery http://ctein.com

-- Digital Restorations http://photo-repair.com

======================================

Posted by: ctein | Thursday, 11 October 2012 at 03:07 PM

Good article, nice graphics, especially the last (simulated) one.

I use (all the time) the Olympus ISO-bracket feature, which gives me three JPEGs that include the darkest and brightest portions of the discarded RAW file.

If the E-M5 has so much more highlight RAW headroom (which it can NOT demonstrate in a single JPEG), then it might just give me even more nice latitude when used with ISO-bracket ... mmm.

I may have a chance to find out tomorrow.

Posted by: Ulfric M Douglas | Thursday, 11 October 2012 at 03:58 PM

This is what I wrote years ago when I noticed something was strange with the Olympus E-30/E-620 cameras when they came out vs. the previous generation. Everyone was saying they had more highlight range than the E-3, but in truth the total DR is ballpark the same.

http://forums.dpreview.com/forums/post/31918699

I think it's good to know this for cameras that give you ISO 100 as an "extended" value vs. ISO 200 as their nominal value, to know what happens to the DR (you lose 1 stop of highlight range at ISO 100 but gain better shadow range).
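
For anyone who wants the stop arithmetic spelled out, here is a rough sketch in Python. It assumes the "extended" ISO simply gives the sensor more exposure and then pulls the result back down, so the total range stays fixed and only the split around middle gray moves. The function name and the 3.5/7.0-stop figures are made up for illustration, not measurements from any camera.

```python
import math

def extended_iso_range(highlight_stops, shadow_stops, nominal_iso, extended_iso):
    """Return (highlight, shadow) stops around middle gray at the extended ISO.

    Assumption: the extended setting is the nominal ISO with extra exposure,
    so range moves from the highlights to the shadows and the total is unchanged.
    """
    shift = math.log2(nominal_iso / extended_iso)  # extra exposure, in stops
    return highlight_stops - shift, shadow_stops + shift

# Hypothetical camera: 3.5 stops above middle gray and 7 below at nominal ISO 200.
highlights, shadows = extended_iso_range(3.5, 7.0, nominal_iso=200, extended_iso=100)
print(highlights, shadows)  # 2.5 8.0
```

Going from ISO 200 to ISO 100 is one stop, so one stop of highlight range turns into one stop of shadow range, and the sum is the same either way.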

Posted by: Ricardo | Saturday, 13 October 2012 at 08:57 AM